After the First 14 Days: Observations on AI Solar Forecasting

When I embarked on developing an AI-based forecasting model for my photovoltaic installation, my expectations were cautious but optimistic. The goal was simple: outperform traditional GTI (Global Tilted Irradiance) models by leveraging machine learning’s ability to find non-linear relationships between weather inputs and real-world energy output.

From the outset, I understood that forecast accuracy would be bounded by two realities: the inherent noise in weather prediction and the chaotic behavior of real-world solar systems. No model, no matter how sophisticated, could eliminate uncertainty entirely, but the hope was that AI could learn enough from historical patterns to meaningfully reduce average error rates.

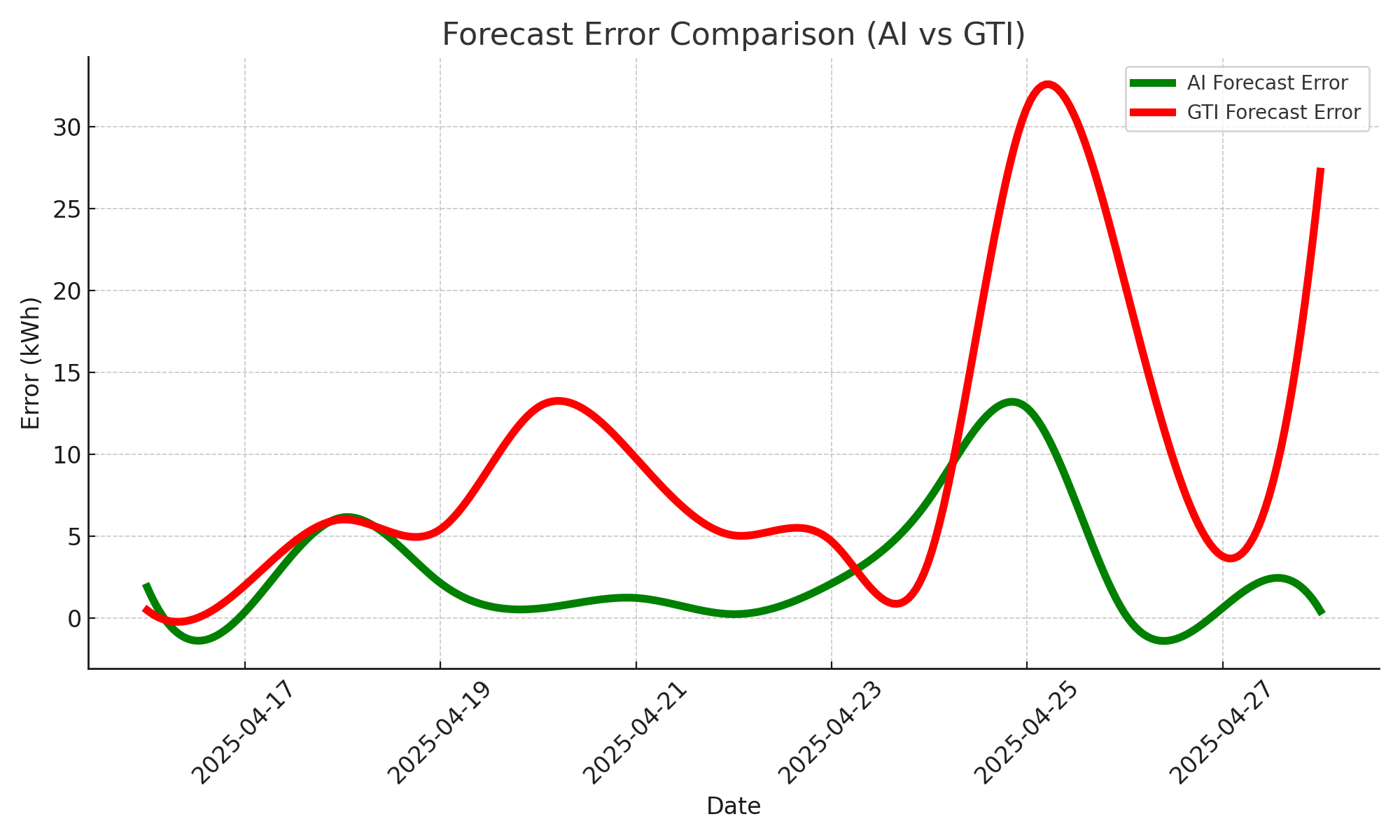

Now, after the first 14 consecutive days of live operation (training, predicting, and verifying against measured inverter data), I have a preliminary dataset large enough to draw early conclusions. The bottom line? The AI outperforms static GTI estimates under typical conditions and holds up well even when atmospheric variability degrades forecast quality.

Best Days: Near-Perfect Precision

There were days when the AI model hit the mark almost perfectly. On 22, 26, 27, and 28 April, the AI predictions came within 1% of the actual measured production. On those days, conditions were relatively stable: no sudden storms, no extreme cloud cover, and the weather forecasts fed into the model matched reality quite well.

It’s hard to overstate how satisfying it is to see a machine-learning model trained on historical data deliver this level of precision, especially considering that solar production can vary wildly based on tiny atmospheric changes.

Worst Days: Weather Chaos Rules

Of course, not every day went smoothly. On heavily overcast or stormy days, both the AI model and the GTI model struggled. Extreme cloud variability, scattered showers, or sudden fog cannot always be captured by weather forecasts, and when the input data is off, even the smartest model can only do so much.

These days were a reminder that the main limitation isn't the AI's "understanding" but the imperfect knowledge of future weather conditions.

Major AI Wins: When It Counts

There were moments when the AI model clearly outclassed the traditional GTI method.

Take 20 April as an example:

- The GTI model forecasted a meager 15 kWh of production.

- The AI model, analyzing temperature, cloud cover, and historical behavior, predicted closer to 28 kWh.

- Reality proved the AI right: actual production was 28.25 kWh.

This showed exactly what I had hoped for: the AI model can "know" that even a relatively cloudy day at the right temperature, with wind effects and local panel behavior, can still yield good results. That is something a static model simply can’t predict.
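To put the 20 April numbers on a common scale, here is a minimal sketch that expresses each forecast's miss as a percentage of measured production. The metric (absolute error divided by actual output) and the function name `pct_error` are assumptions chosen for illustration, not necessarily how the errors in this post were computed. The AI value uses the rounded "closer to 28 kWh" figure.

```python
def pct_error(forecast_kwh: float, actual_kwh: float) -> float:
    """Absolute forecast error as a percentage of measured production."""
    if actual_kwh <= 0:
        # Percentage error is undefined when nothing was produced.
        raise ValueError("actual production must be positive")
    return abs(forecast_kwh - actual_kwh) / actual_kwh * 100.0


# Figures for 20 April as quoted in the text (AI forecast rounded to 28 kWh).
actual = 28.25
gti_error = pct_error(15.0, actual)
ai_error = pct_error(28.0, actual)

print(f"GTI: {gti_error:.1f}%  AI: {ai_error:.1f}%")
```

With these inputs, the GTI forecast misses by roughly 47% of the day's production, while the AI forecast is off by less than 1%.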

Where GTI Wins: Low-Light Extremes

There were still cases where the good old GTI model did better.

On extremely rainy days, when solar production was going to be negligible anyway, GTI’s conservative estimates turned out closer to reality. That’s understandable: if there's almost no sun at all, a simple physics-based assumption ("no sun = no power") is good enough. In those cases, the AI model sometimes overestimated potential based on weather forecasts that were simply too optimistic.

Catastrophic Days: Nobody Wins

Finally, there were a couple of catastrophic days, 24 and 25 April among them. Here, both AI and GTI predictions were way off, because the weather itself defied forecasts. Sudden storms, unexpected cloudbanks, or shifts in wind patterns made any prediction laughably wrong.

It’s a reminder that, in the end, forecasting, even with AI, is still at the mercy of Mother Nature.

Conclusion

After just two weeks of daily tracking, comparison, and evaluation, one thing has become clear: AI forecasting works. Not just in theory, but in messy, chaotic, real-world conditions.

On most days, the AI model has proven more accurate than traditional GTI-based predictions, especially under stable or moderately variable weather. It captures subtle, non-linear influences that static irradiance models miss entirely: temperature swings, cloud micro-patterns, seasonal quirks, and even the unique behavior of my specific panel and inverter setup.

Of course, when the input weather forecasts themselves fail, when sudden storms appear or heavy haze rolls in unpredicted, no model can work miracles. Even so, the AI system generally fails more gracefully, producing smaller errors than GTI in most cases.
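One way to check a claim like "smaller errors in most cases" is to tally, over the logged days, the mean absolute error of each model and the number of days on which the AI forecast was closer. The sketch below assumes a simple per-day record; the `DayResult` type, its field names, and the `compare` function are hypothetical. Only the 20 April row from this post is filled in, and the remaining days would come from the inverter log.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class DayResult:
    date: str
    ai_kwh: float      # AI forecast for the day
    gti_kwh: float     # GTI-based forecast for the day
    actual_kwh: float  # measured inverter production


def compare(days: list[DayResult]) -> dict:
    """Summarize absolute errors of both models over a non-empty list of days."""
    ai_err = [abs(d.ai_kwh - d.actual_kwh) for d in days]
    gti_err = [abs(d.gti_kwh - d.actual_kwh) for d in days]
    return {
        "ai_mae_kwh": mean(ai_err),
        "gti_mae_kwh": mean(gti_err),
        # Days on which the AI forecast was strictly closer to reality.
        "ai_wins": sum(a < g for a, g in zip(ai_err, gti_err)),
        "days": len(days),
    }


# Only the 20 April figures are taken from the text; add one row per logged day.
days = [DayResult("20 April", ai_kwh=28.0, gti_kwh=15.0, actual_kwh=28.25)]
summary = compare(days)
print(summary)
```

Using absolute error in kWh rather than a percentage keeps near-zero production days from dominating the average, which matters for the rainy days where GTI tends to do better.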

What's emerging here is more than just a better number on a dashboard.

It’s a dynamic, evolving tool:

- It adapts to new data.

- It learns from deviations.

- And it reflects the living, breathing reality of solar energy production in a way that rigid formulas never could.

And this is still just the beginning. As I collect more data, retrain the models, fine-tune hyperparameters, and explore ensemble techniques, I fully expect the forecasts to become sharper, more resilient, and perhaps even self-correcting in near real time.

Figure: Forecast error per day for AI and GTI models over the first two weeks. AI (green) shows much more stable performance than the traditional GTI forecast (red).